Use the Best Data Format

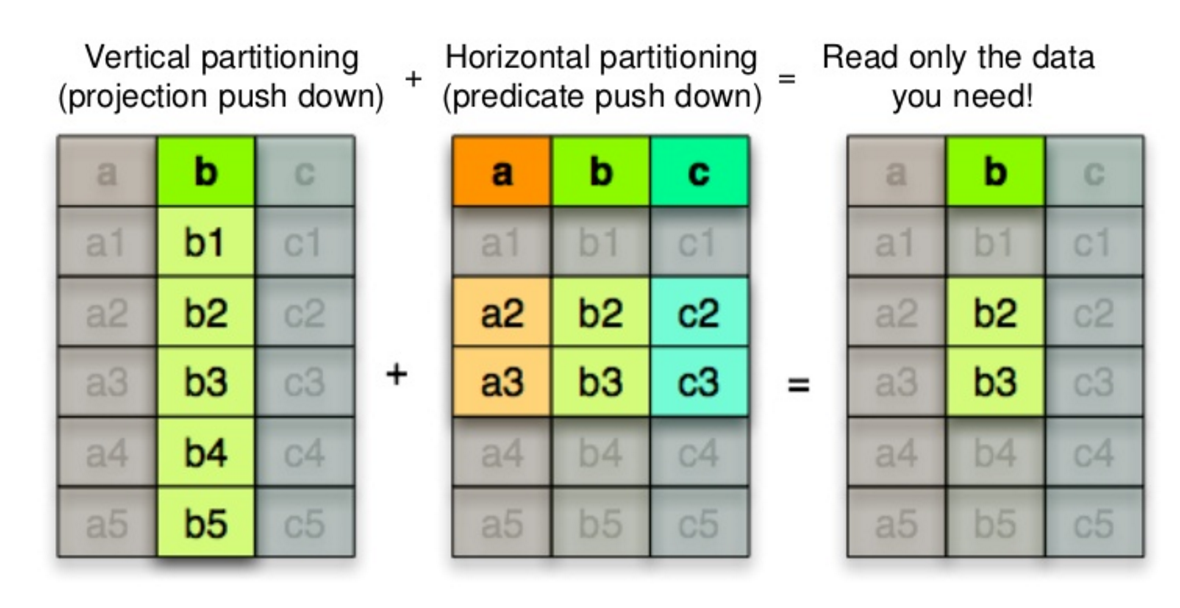

Apache Spark supports several data formats, including CSV, JSON, ORC, and Parquet, but just because Spark supports a given data storage format doesn’t mean you’ll get the same performance with all of them. Parquet is a columnar storage format: values are stored column by column, so a reader can fetch only the columns a query actually uses and skip the rest. Storing similar values together also lets Parquet encode and compress them efficiently, which can shrink files considerably and makes Spark SQL queries more efficient. The picture above, taken from [24], illustrates what column pruning (projection pushdown) and predicate pushdown can achieve with Parquet files.
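To make the two optimizations concrete, here is a toy model in plain Python. It is not Parquet's real file layout or Spark's reader: a hypothetical "file" is split into row groups, each row group stores one list per column, and each keeps the min/max of every column. Column pruning means only the requested columns are touched; predicate pushdown means a row group whose min/max range cannot contain the filter value is skipped without reading its data. The helpers `make_row_group` and `scan` are illustrative names, not a library API.

```python
from typing import Any, Dict, List, Tuple

# Toy model of a columnar file (NOT the real Parquet format): rows are split
# into row groups; each group stores one list per column plus min/max stats.


def make_row_group(rows: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Pivot row-oriented records into per-column lists and min/max stats."""
    columns = {name: [row[name] for row in rows] for name in rows[0]}
    stats = {name: (min(vals), max(vals)) for name, vals in columns.items()}
    return {"columns": columns, "stats": stats}


def scan(row_groups: List[Dict[str, Any]], select: List[str],
         column: str, value: Any) -> Tuple[List[Dict[str, Any]], int, int]:
    """Return the `select` columns of rows where `column == value`.

    Column pruning: only the selected columns and the filter column are read.
    Predicate pushdown: a row group whose [min, max] range for `column`
    cannot contain `value` is skipped without reading any of its values.
    Also returns how many row groups and individual values were read.
    """
    needed = set(select) | {column}
    matches: List[Dict[str, Any]] = []
    groups_read = values_read = 0
    for group in row_groups:
        lo, hi = group["stats"][column]
        if not lo <= value <= hi:
            continue  # statistics alone rule this group out
        groups_read += 1
        values_read += sum(len(group["columns"][name]) for name in needed)
        for i, v in enumerate(group["columns"][column]):
            if v == value:
                matches.append({name: group["columns"][name][i] for name in select})
    return matches, groups_read, values_read


# Hypothetical sample data: two row groups of three columns each.
row_groups = [
    make_row_group([
        {"id": "AA-BB-00-77", "destination": "ATL", "arrdelay": 0.0},
        {"id": "AA-BB-00-78", "destination": "BOS", "arrdelay": 12.0},
    ]),
    make_row_group([
        {"id": "AA-BB-00-79", "destination": "LGA", "arrdelay": 5.0},
        {"id": "AA-BB-00-80", "destination": "ORD", "arrdelay": 3.0},
    ]),
]

matches, groups_read, values_read = scan(row_groups, ["arrdelay"], "destination", "BOS")
print(matches, groups_read, values_read)  # reads 4 of the 12 stored values
```

The second row group has destination values between LGA and ORD, so its statistics prove it cannot hold BOS and none of its values are read; in the first group, the id column is never touched.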

As an example, imagine a vehicle dataset containing records like this one:

```json
{
  "id": "AA-BB-00-77",
  "type": "truck",
  "origin": "ATL",
  "destination": "LGA",
  "depdelay": 0.0,
  "arrdelay": 0.0,
  "distance": 762.0
}
```

Here is the code to persist a vehicles DataFrame as a table consisting of Parquet files partitioned by the destination column:

Below is the resulting directory structure as shown by a Hadoop list files command:
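Writing a DataFrame with `partitionBy` produces one subdirectory per distinct value of the partition column, named `column=value`. As a stdlib-only sketch of that layout, the following writes a few vehicle records partitioned by destination and lists the result. It assumes CSV files as a stand-in for Parquet, and the file name `part-00000.csv` and the helpers `write_partitioned` and `list_files` are hypothetical. As with Spark's partitioned writes, the partition column is encoded in the directory name and left out of the data files.

```python
import csv
import os
import tempfile
from collections import defaultdict
from typing import Any, Dict, List

# Hypothetical sample records shaped like the vehicle example above.
VEHICLES: List[Dict[str, Any]] = [
    {"id": "AA-BB-00-77", "type": "truck", "origin": "ATL", "destination": "LGA",
     "depdelay": 0.0, "arrdelay": 0.0, "distance": 762.0},
    {"id": "AA-BB-00-78", "type": "truck", "origin": "ATL", "destination": "BOS",
     "depdelay": 4.0, "arrdelay": 10.0, "distance": 946.0},
    {"id": "AA-BB-00-79", "type": "van", "origin": "LGA", "destination": "BOS",
     "depdelay": 0.0, "arrdelay": 2.0, "distance": 184.0},
]


def write_partitioned(rows: List[Dict[str, Any]], root: str, partition_col: str) -> None:
    """Write one `partition_col=value` subdirectory per distinct value.

    The partition column is encoded in the directory name, so it is left
    out of the data files themselves (CSV here stands in for Parquet).
    """
    groups: Dict[Any, List[Dict[str, Any]]] = defaultdict(list)
    for row in rows:
        groups[row[partition_col]].append(row)
    for value, group in groups.items():
        part_dir = os.path.join(root, f"{partition_col}={value}")
        os.makedirs(part_dir, exist_ok=True)
        fields = [name for name in group[0] if name != partition_col]
        with open(os.path.join(part_dir, "part-00000.csv"), "w", newline="") as f:
            writer = csv.DictWriter(f, fieldnames=fields, extrasaction="ignore")
            writer.writeheader()
            writer.writerows(group)


def list_files(root: str) -> List[str]:
    """Return every file under `root` as a sorted, '/'-separated relative path."""
    return sorted(
        os.path.relpath(os.path.join(dirpath, name), root).replace(os.sep, "/")
        for dirpath, _, names in os.walk(root)
        for name in names
    )


table_root = os.path.join(tempfile.mkdtemp(), "vehicles")
write_partitioned(VEHICLES, table_root, "destination")
layout = list_files(table_root)
for path in layout:
    print(path)
# destination=BOS/part-00000.csv
# destination=LGA/part-00000.csv
```

A real Spark job typically writes several part files per partition directory; one file per directory keeps the sketch short.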

Given a vehicles table like the one above, which has 7 columns, column pruning means that if a query lists only 2 of them, the other 5 are not read from disk.

Predicate pushdown, by contrast, limits which values are scanned rather than which columns. If you filter on the destination column to return only records with the value BOS, predicate pushdown lets Parquet read only the blocks (row groups) that may contain BOS, skipping the others based on their statistics.

Partitioning the table by destination improves performance further, because Spark then only needs to read a subset of the directories and files. For example, the following query reads only the files in the destination=BOS partition directory to compute the average arrival delay for vehicles bound for Boston:
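Stripped of Spark, the idea behind such a query can be sketched in plain Python. This is an illustration under stated assumptions, not Spark code: a hypothetical in-memory dictionary maps Hive-style file paths to rows, partition pruning picks files by parsing `destination=...` out of the path before any file is opened, and column pruning reads only the `arrdelay` values needed for the average. `FILES`, `partition_values`, and `average_in_partition` are illustrative names.

```python
from statistics import mean
from typing import Dict, List, Tuple

# Hypothetical in-memory stand-in for a table partitioned by destination:
# file path -> rows. The destination value lives only in the path.
FILES: Dict[str, List[Dict[str, float]]] = {
    "vehicles/destination=BOS/part-00000": [
        {"depdelay": 4.0, "arrdelay": 10.0, "distance": 946.0},
        {"depdelay": 0.0, "arrdelay": 2.0, "distance": 184.0},
    ],
    "vehicles/destination=LGA/part-00000": [
        {"depdelay": 0.0, "arrdelay": 0.0, "distance": 762.0},
    ],
    "vehicles/destination=ORD/part-00000": [
        {"depdelay": 25.0, "arrdelay": 30.0, "distance": 606.0},
    ],
}


def partition_values(path: str) -> Dict[str, str]:
    """Parse Hive-style `key=value` directory names out of a file path."""
    return dict(segment.split("=", 1) for segment in path.split("/") if "=" in segment)


def average_in_partition(files: Dict[str, List[Dict[str, float]]], column: str,
                         key: str, value: str) -> Tuple[float, List[str]]:
    """Average `column` over files whose partition `key` equals `value`."""
    # Partition pruning: decide from the path alone which files to open.
    scanned = [path for path in files if partition_values(path).get(key) == value]
    # Column pruning: pull out only the one column the aggregate needs.
    values = [row[column] for path in scanned for row in files[path]]
    return mean(values), scanned


avg_arrdelay, scanned_files = average_in_partition(FILES, "arrdelay", "destination", "BOS")
print(avg_arrdelay, scanned_files)  # 6.0 ['vehicles/destination=BOS/part-00000']
```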

You can see the physical plan for a DataFrame by calling its explain method:

The PartitionFilters entry in the plan shows partition filter pushdown: the destination=BOS filter is pushed down into the Parquet file scan. This minimizes the files and data scanned and reduces the amount of data passed back to the Spark engine for computing the average arrival delay.
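Roughly speaking, a filter can appear under PartitionFilters when every column it references is a partition column, because it can then be evaluated against directory names before any file is opened; other filters must be evaluated against the data in the files. The sketch below mimics that classification in a simplified form. Spark's planner works on Catalyst expressions rather than strings and handles more cases (for example, filters that mix partition and data columns); `Predicate`, `split_filters`, and the extra `arrdelay > 0` filter are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Tuple


@dataclass(frozen=True)
class Predicate:
    text: str                # human-readable form, e.g. "destination = 'BOS'"
    columns: FrozenSet[str]  # columns the predicate references


def split_filters(predicates: List[Predicate],
                  partition_columns: FrozenSet[str]) -> Tuple[List[Predicate], List[Predicate]]:
    """Simplified split into partition filters and data filters.

    A predicate that references only partition columns can be answered from
    directory names, so whole partitions are pruned before any file is opened.
    Everything else has to be evaluated against the data in the files.
    """
    partition_filters: List[Predicate] = []
    data_filters: List[Predicate] = []
    for pred in predicates:
        if pred.columns <= partition_columns:
            partition_filters.append(pred)
        else:
            data_filters.append(pred)
    return partition_filters, data_filters


filters = [
    Predicate("destination = 'BOS'", frozenset({"destination"})),
    Predicate("arrdelay > 0", frozenset({"arrdelay"})),
]
partition_filters, data_filters = split_filters(filters, frozenset({"destination"}))
print([p.text for p in partition_filters])  # ["destination = 'BOS'"]
print([p.text for p in data_filters])       # ['arrdelay > 0']
```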
