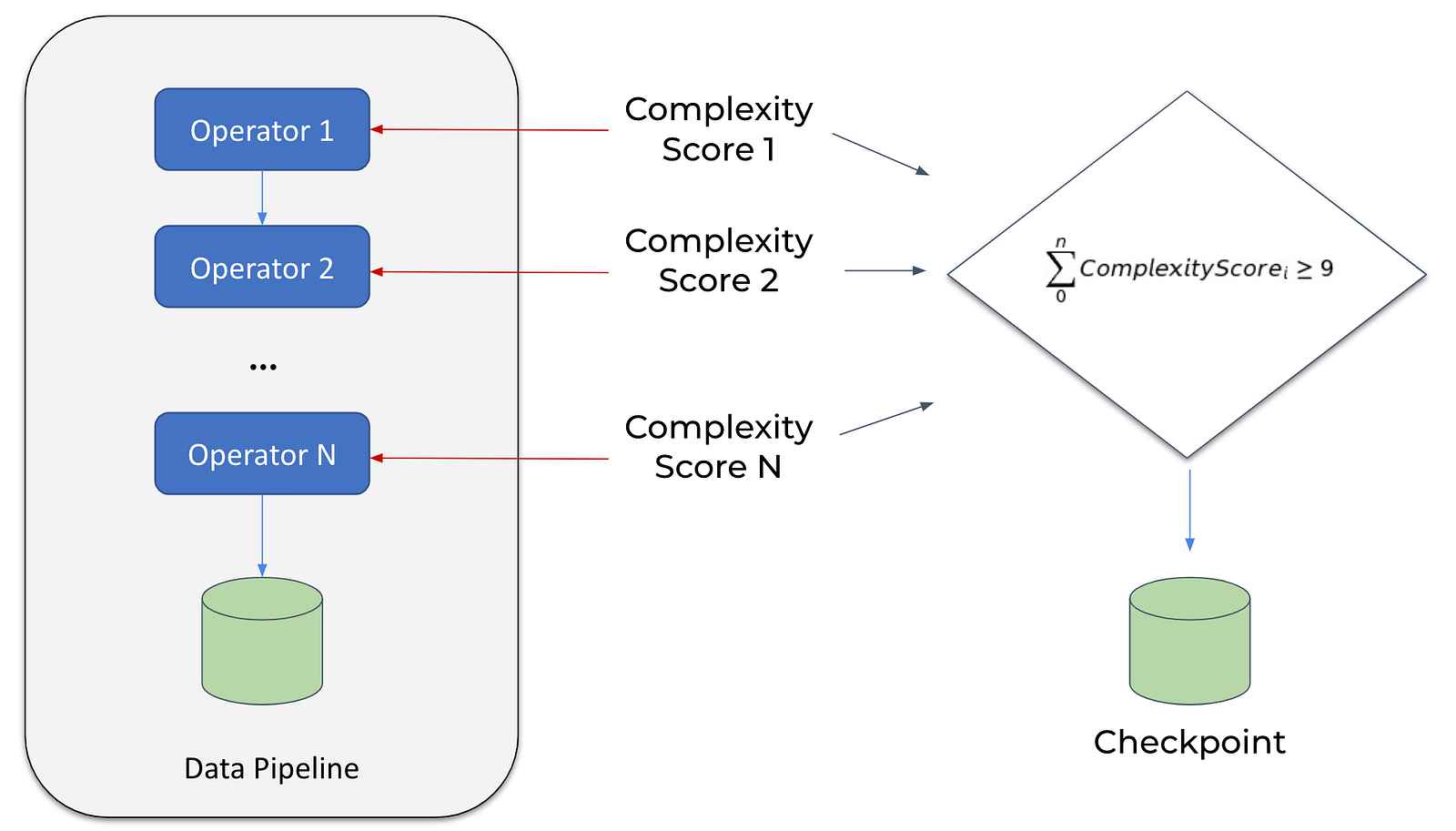

Cache Judiciously and Use Checkpointing
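Caching and checkpointing solve different problems. A cached dataset keeps its computed partitions, so later actions skip recomputation, but it still carries its full lineage. A checkpoint writes the data to reliable storage (the directory set with `SparkContext.setCheckpointDir`) and truncates the lineage, which keeps plans short in long iterative jobs. The toy model below is a minimal, hypothetical sketch: `ToyDataset` is not a Spark class, and it only counts recomputations and records lineage to make the difference visible. It assumes Spark-like semantics where `cache()` is lazy and `checkpoint()` is eager, as `DataFrame.checkpoint()` is by default.

```python
from typing import Callable, List, Optional


class ToyDataset:
    """Toy model of a lazily evaluated dataset. Illustrative only, NOT Spark's implementation."""

    def __init__(self, compute: Callable[[], List[int]],
                 parent: Optional["ToyDataset"] = None, name: str = "source") -> None:
        self._compute = compute              # rebuilds this step's rows from its parent
        self.parent = parent                 # lineage pointer
        self.name = name
        self._cache_requested = False
        self._cached: Optional[List[int]] = None
        self.compute_count = 0               # how many times this step actually ran

    def filter(self, pred: Callable[[int], bool]) -> "ToyDataset":
        return ToyDataset(lambda: [x for x in self.collect() if pred(x)], self, "filter")

    def map(self, fn: Callable[[int], int]) -> "ToyDataset":
        return ToyDataset(lambda: [fn(x) for x in self.collect()], self, "map")

    def cache(self) -> "ToyDataset":
        # Lazy, like Spark: rows are stored by the first action, not here.
        self._cache_requested = True
        return self

    def collect(self) -> List[int]:
        if self._cached is not None:
            return list(self._cached)        # served from cache, no recomputation
        self.compute_count += 1
        rows = self._compute()               # walks the lineage back to the source
        if self._cache_requested:
            self._cached = rows
        return list(rows)

    def checkpoint(self) -> "ToyDataset":
        # Eager: materialize now and return a dataset with no parent (lineage truncated).
        rows = self.collect()
        return ToyDataset(lambda: list(rows), None, "checkpoint")

    def lineage(self) -> List[str]:
        node, names = self, []
        while node is not None:
            names.append(node.name)
            node = node.parent
        return list(reversed(names))


# Without caching, every action recomputes the source.
plain_src = ToyDataset(lambda: list(range(10)))
plain = plain_src.filter(lambda x: x % 2 == 0).map(lambda x: x * 10)
plain.collect()
plain.collect()
print(plain_src.compute_count)   # 2

# With caching, the filtered rows are reused by the second action.
cached_src = ToyDataset(lambda: list(range(10)))
evens = cached_src.filter(lambda x: x % 2 == 0).cache()
evens.collect()
evens.collect()
print(cached_src.compute_count)  # 1

# Checkpointing keeps the data but drops the lineage.
shifted = evens.map(lambda x: x + 1)
cp = shifted.checkpoint()
print(shifted.lineage())         # ['source', 'filter', 'map']
print(cp.lineage())              # ['checkpoint']
```

The trade-off follows directly: caching is cheap to request but only pays off when the same data is reused, while checkpointing costs a write up front and is worth it when the lineage itself has grown long.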

```
== Physical Plan ==
TakeOrderedAndProject(limit=1001, orderBy=[avg(arrdelay)#304 DESC NULLS LAST], output=[destination#157,avg(arrdelay)#304])
+- *(2) HashAggregate(keys=[destination#157], functions=[avg(arrdelay#152)], output=[destination#157, avg(arrdelay)#304])
   +- Exchange hashpartitioning(destination#157, 200)
      +- *(1) HashAggregate(keys=[destination#157], functions=[partial_avg(arrdelay#152)], output=[destination#157, sum#321, count#322L])
         +- *(1) Project [arrdelay#152, destination#157]
            +- *(1) Filter (isnotnull(arrdelay#152) && (arrdelay#152 > 1.0))
               +- *(1) FileScan parquet default.flights[arrdelay#152,destination#157]
                    Batched: true,
                    Format: Parquet,
                    Location: PrunedInMemoryFileIndex[dbfs:/data/vehicles/destination=BOS],
                    PartitionCount: 1,
                    PartitionFilters: [isnotnull(destination#157), (destination#157 = BOS)],
                    PushedFilters: [IsNotNull(arrdelay), GreaterThan(arrdelay,1.0)],
                    ReadSchema: struct<arrdelay:double>
```
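In the `FileScan` node of this plan, `PartitionCount: 1` and the `PartitionFilters` entry show that the filter on the partition column `destination` pruned the scan to the single `destination=BOS` directory, while `PushedFilters` lists the `arrdelay` predicates handed to the Parquet reader. To check this automatically, for example in a regression test, one option is to parse those lists out of the plan text. The sketch below is hypothetical: `scan_filters` and `split_top_level` are not Spark APIs, and it assumes the plan has already been captured as a string (for example, copied from `explain()` output).

```python
import re
from typing import Dict, List


def split_top_level(items: str) -> List[str]:
    """Split on commas that are not nested inside (...) or [...]."""
    parts: List[str] = []
    current: List[str] = []
    depth = 0
    for ch in items:
        if ch in "([":
            depth += 1
        elif ch in ")]":
            depth -= 1
        if ch == "," and depth == 0:
            parts.append("".join(current).strip())
            current = []
        else:
            current.append(ch)
    tail = "".join(current).strip()
    if tail:
        parts.append(tail)
    return parts


def scan_filters(plan: str) -> Dict[str, List[str]]:
    """Pull PartitionFilters and PushedFilters out of a FileScan entry in plan text."""
    found: Dict[str, List[str]] = {}
    for key in ("PartitionFilters", "PushedFilters"):
        # Non-greedy match up to the ']' that ends the list (followed by ',' or end of line).
        m = re.search(key + r": \[(.*?)\](?:,|$)", plan, re.MULTILINE)
        found[key] = split_top_level(m.group(1)) if m else []
    return found


scan_line = (
    "FileScan parquet default.flights[arrdelay#152,destination#157] "
    "PartitionCount: 1, "
    "PartitionFilters: [isnotnull(destination#157), (destination#157 = BOS)], "
    "PushedFilters: [IsNotNull(arrdelay), GreaterThan(arrdelay,1.0)], "
    "ReadSchema: struct<arrdelay:double>"
)
filters = scan_filters(scan_line)
print(filters["PartitionFilters"])  # ['isnotnull(destination#157)', '(destination#157 = BOS)']
print(filters["PushedFilters"])     # ['IsNotNull(arrdelay)', 'GreaterThan(arrdelay,1.0)']
```

An empty `PartitionFilters` list for a query that filters on a partition column is a quick signal that pruning did not happen. The regular expression is deliberately simple and may need adjusting for filters that contain a `],` sequence inside a literal.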

Which storage level to choose
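The rules of thumb that the Apache Spark RDD programming guide gives for this choice can be written down as a small decision function. The sketch below is a hypothetical helper (`choose_storage_level` is not a Spark API) that returns the name of a `StorageLevel` constant; the boolean inputs are assumptions the caller has to judge. It leaves out the serialized `_SER` levels, which the guide recommends for Java and Scala but which make no difference in Python, where stored objects are always pickled.

```python
def choose_storage_level(fits_in_memory: bool,
                         expensive_or_highly_filtered: bool,
                         needs_fast_recovery: bool = False) -> str:
    """Hypothetical helper: map the Spark guide's rules of thumb to a StorageLevel name."""
    if fits_in_memory:
        # Data fits comfortably: keep the default in-memory level.
        level = "MEMORY_ONLY"
    elif expensive_or_highly_filtered:
        # Recomputing evicted partitions would be costly, so spill them to disk.
        level = "MEMORY_AND_DISK"
    else:
        # Recomputing an evicted partition may be as fast as reading it from disk.
        level = "MEMORY_ONLY"
    if needs_fast_recovery:
        # Replicated levels keep each partition on two nodes.
        level += "_2"
    return level


print(choose_storage_level(False, True))        # MEMORY_AND_DISK
print(choose_storage_level(True, False, True))  # MEMORY_ONLY_2
```

In PySpark, the returned name can be looked up with `getattr(StorageLevel, name)` and passed to `DataFrame.persist()`.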
